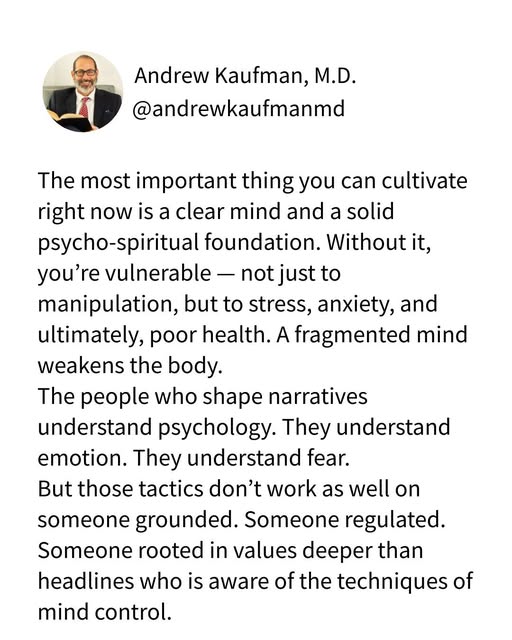

Immunize Yourself Against Control Techniques

It Was Never About The Big Things

My take on this is that if you do the small things right, and enjoy them, the big things are more likely to follow and persist.

Quote of the Day

“The journey of a thousand miles begins with one step.”

Lao Tzu – Philosopher (604 – 531 BC)

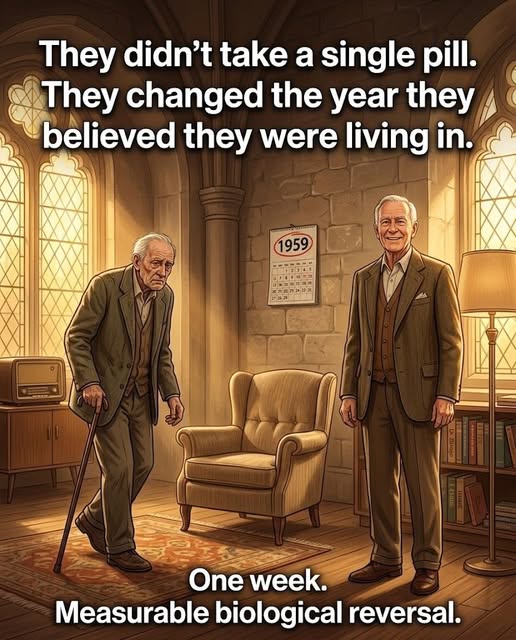

Can You Think Yourself Younger?

In 1979, Harvard psychologist Ellen Langer brought a group of men in their late 70s and 80s to a monastery retrofitted to look like 1959. No mirrors. No current photos. They spoke about Eisenhower in the present tense. They were told to inhabit their younger selves completely.

One week later, the results were biologically puzzling. Their joints were more flexible. Their grip strength increased. Their vision improved. Arthritic fingers actually lengthened as inflammation subsided. Independent observers judged their “after” photos to look significantly younger.

On the final day, these men who had arrived frail and dependent were playing touch football on the front lawn.

We think of aging as a one-way street where parts wear out and systems fail. But what if the body is simply following instructions? If you tell the mind it is 1959, the body does not check the calendar. It listens to the mind.

Your body is eavesdropping on every signal you send it. What are you telling it today?

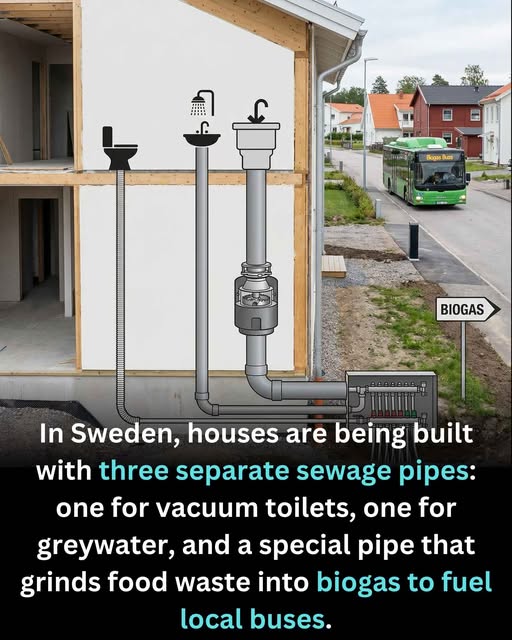

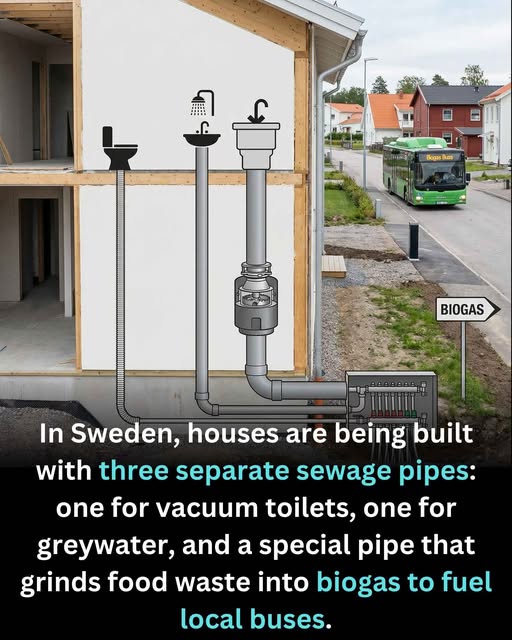

A New Waste Treatment

Jacob Collier Improvises with Orchestra Live in San Francisco

Masterful! Brilliant! Moving! A must-watch and must-listen!

Click to view the video: https://www.facebook.com/watch?v=1599635021060206

Quote of the Day

“We are faced with the paradoxical fact that education has become one of the chief obstacles to intelligence and freedom of thought.”

Bertrand Russell

Quote of the Day

“The reward of a thing well done is having done it.” – Ralph Waldo Emerson, Poet (1803 – 1882)